Datasets and preprocessing

Similar to other state-of-the-art brain tumor segmentation models [8, 29], the proposed pipeline relies on multimodal 4-channel MR images as inputs. As such, we formed our dataset using the 369 three-dimensional (3D) T1-weighted, post-contrast T1-weighted, T2-weighted, and T2 Fluid Attenuated Inversion Recovery (T2-FLAIR) MR image volumes from the Multimodal Brain Tumor Segmentation Challenge (BraTS) 2020 dataset [30,31,32,33,34]. These volumes were combined to form 369 3D multimodal volumes with 4 channels, where the channels represent the T1-weighted, post-contrast T1-weighted, T2-weighted, and T2-FLAIR images for each patient. Only the training set of the BraTS dataset was used because it is the only one with publicly available ground truths.

The images were preprocessed by first cropping each image and segmentation map using the smallest bounding box that contained the brain, clipping all non-zero intensity values to their 1st and 99th percentiles to remove outliers, normalizing the cropped images using min-max scaling, and then randomly cropping the images to fixed patches of size \(128 \times 128\) along the coronal and sagittal axes, as done by Henry et al. [5] and Wang et al. [35] in their work with BraTS datasets. The 369 available patient volumes were then split into 295 (80%), 37 (10%), and 37 (10%) volumes for the training, validation, and test cohorts, respectively.
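The preprocessing steps above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, the brain is assumed to be the set of non-zero voxels (BraTS volumes are skull-stripped), the volume layout is assumed to be (channels, depth, height, width), and the random crop is assumed to act on the last two axes, each at least 128 pixels long.

```python
import numpy as np


def crop_to_brain(volume, segmentation):
    """Crop a (C, D, H, W) volume and its (D, H, W) mask to the smallest
    box containing every voxel that is non-zero in any channel."""
    brain = np.any(volume != 0, axis=0)
    coords = np.argwhere(brain)
    lo = coords.min(axis=0)
    hi = coords.max(axis=0) + 1
    box = tuple(slice(a, b) for a, b in zip(lo, hi))
    return volume[(slice(None),) + box], segmentation[box]


def clip_and_rescale(channel, low_pct=1.0, high_pct=99.0):
    """Clip non-zero intensities to the given percentiles, then min-max
    scale the whole array to [0, 1]."""
    out = channel.astype(np.float64)  # astype returns a copy
    nonzero = out != 0
    if not nonzero.any():
        return out
    lo, hi = np.percentile(out[nonzero], [low_pct, high_pct])
    out[nonzero] = np.clip(out[nonzero], lo, hi)
    span = out.max() - out.min()
    return (out - out.min()) / span if span > 0 else np.zeros_like(out)


def random_crop(volume, segmentation, size=128, rng=None):
    """Randomly crop the last two axes to size x size.
    Assumes both axes are at least `size` long (no padding is done)."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = volume.shape[-2:]
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    window = (Ellipsis, slice(top, top + size), slice(left, left + size))
    return volume[window], segmentation[window]
```

Whether normalization is applied per channel or per volume is not stated in the text; the sketch normalizes whatever array it is given, so the caller decides.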

The 3D multimodal volumes were then split into axial slices to form multimodal 2-dimensional (2D) images with 4 channels. After splitting the volumes into 2D images, the first 30 and last 30 slices of each volume were removed, as done by Han et al. [36], because these slices lack useful information. The training, validation, and test cohorts had 24635, 3095, and 3077 stacked 2D images, respectively. For the training, validation, and test cohorts, respectively, 68.9%, 66.3%, and 72.3% of images were cancerous. The images will be referred to as \(X = \{x_1, x_2, \ldots, x_N\} \in \mathbb{R}^{N, 4, H, W}\), where N is the number of images, \(H=128\), and \(W=128\). The ground truth \(y_k\) for each slice was assigned 0 if the corresponding true segmentation was empty, and 1 otherwise.
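As a rough illustration of the slicing and labelling step, the sketch below splits one volume into axial 2D images, drops the first and last 30 slices, and derives the binary image-level labels from the segmentation masks. The function name and the assumption that axis 1 of a (C, D, H, W) array is the axial axis are ours, not the paper's.

```python
import numpy as np


def volume_to_slices(volume, segmentation, skip=30):
    """Split a (C, D, H, W) volume into axial 2D images.

    Drops the first and last `skip` slices and returns
    images of shape (n, C, H, W) and binary labels of shape (n,),
    where a label is 1 if the slice's segmentation is non-empty.
    """
    depth = volume.shape[1]
    keep = slice(skip, depth - skip)
    images = np.moveaxis(volume[:, keep], 1, 0)
    masks = segmentation[keep]
    labels = (masks != 0).any(axis=(1, 2)).astype(np.int64)
    return images, labels
```

For a volume with 70 axial slices this keeps slices 30 to 39, that is, 10 images.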

To assess generalizability, we also prepared the BraTS 2023 dataset [30,31,32, 34, 37] for use as an external test cohort during evaluation. To do so, we removed data from the BraTS 2023 dataset that appeared in the BraTS 2020 dataset, preprocessed the images in the same way as the BraTS 2020 images, and then extracted the cross-section with the largest tumor area from each patient. This resulted in 886 images from the BraTS 2023 dataset.

Proposed weakly supervised segmentation method

We first trained a classifier model to identify whether an image contains a tumor, then generated localization seeds from the model using Randomized Input Sampling for Explanation of Black-box Models (RISE) [38]. The localization seeds used the classifier's understanding to assign each pixel in the images to one of three categories. The first, referred to as positive seeds, indicates regions of the image with a high likelihood of containing a tumor. The second, referred to as negative seeds, indicates regions with a low likelihood of containing a tumor. The final category, referred to as unseeded regions, corresponds to the remaining areas of the images and indicates regions of low confidence from the classifier. This resulted in positive seeds that undersegment the tumor, and negative seeds that undersegment the non-cancerous regions. Assuming that the seeds were accurate, they simplified the task of classifying all the pixels in the image to classifying only the unseeded regions, and provided a prior on image features indicating the presence of tumors. The seeds were used as pseudo-ground truths to simultaneously train both a superpixel generator and a superpixel clustering model which, when used together, produced the final refined segmentations from the probability heat map of the superpixel-based segmentations. Using undersegmented seeds, rather than seeds that attempt to precisely replicate the ground truths, increased the acceptable margin of error and reduced the risk of accumulated propagation errors.

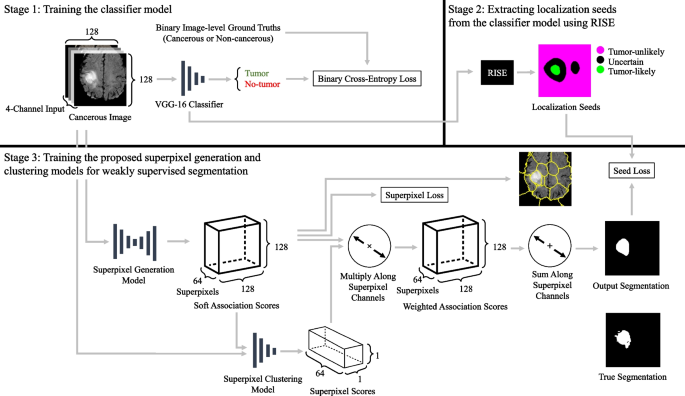

A flowchart of the proposed method is presented in Fig. 1. We chose to use 2D images over 3D images because converting 3D MR volumes to 2D MR images yields significantly more data samples and reduces memory costs. Many state-of-the-art models such as SAM and MedSAM use 2D images [27, 28], and previous work demonstrated that brain tumors can be effectively segmented from 2D images [39].

Flowchart of the proposed weakly supervised segmentation method. For the localization seeds component, green indicates positive seeds, magenta indicates negative seeds, and black indicates unseeded regions. Solid lines represent use as inputs and outputs. The superpixel generation model uses a fully convolutional AINet architecture [40] and outputs each pixel's association with each of the 64 potential superpixels. The superpixel clustering network uses a ResNet-18 architecture and outputs a score for each of the 64 superpixels indicating the likelihood that each superpixel contains a tumor. The labels used to train the method are binary image-level labels which indicate the presence or absence of tumors.

Stage 1: Training the classifier model

The classifier model was trained to output the probability that each \(x_k \in X\) contains a tumor, where \(X = \{x_1, x_2, \ldots, x_N\} \in \mathbb{R}^{N, 4, H, W}\) is a set of brain MR images, and N is the number of images in X. Prior to being input to the classifier, the images were upsampled by a factor of 2. The images were not upsampled for any other model in the proposed method. This classifier model was trained using \(Y = \{y_1, \ldots, y_N\}\) as the ground truths, where \(y_k\) is a binary label with a value of 1 if \(x_k\) contains a tumor and 0 otherwise. The method is independent of the classifier architecture, and thus other classifier architectures can be used instead.

Stage 2: Extracting localization seeds from the classifier model using RISE

RISE is a method proposed by Petsiuk et al. that generates heat maps indicating the importance of each pixel in an input image for a given model's prediction [38]. RISE first creates numerous random binary masks which are used to perturb the input image. RISE then evaluates the change in the model prediction when the input image is perturbed by each of the masks. The change in model prediction at each perturbed pixel is then accumulated across all the masks to form the heat maps.
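The idea can be illustrated with a simplified sketch. It is not the reference RISE implementation: the masks here are coarse random grids upsampled by nearest-neighbour repetition (the original method uses smooth, randomly shifted masks), the accumulated scores are normalized by how often each pixel was left visible, and `model` is a hypothetical callable that maps a (C, H, W) image to a scalar score.

```python
import numpy as np


def random_masks(n_masks, height, width, cells=8, keep_prob=0.5, rng=None):
    """Generate binary masks by upsampling random cells x cells grids."""
    rng = np.random.default_rng() if rng is None else rng
    grid = rng.random((n_masks, cells, cells)) < keep_prob
    cell_h = -(-height // cells)  # ceiling division
    cell_w = -(-width // cells)
    up = np.repeat(np.repeat(grid, cell_h, axis=1), cell_w, axis=2)
    return up[:, :height, :width].astype(np.float64)


def rise_heatmap(model, image, masks):
    """Accumulate the model score of each masked image over the pixels
    that the mask left visible.

    image: (C, H, W); masks: (M, H, W); returns an (H, W) heat map.
    """
    scores = np.array([model(image * m) for m in masks])
    heat = np.tensordot(scores, masks, axes=1)  # sum_i score_i * mask_i
    visible_count = masks.sum(axis=0).clip(min=1.0)
    return heat / visible_count
```

Pixels whose visibility tends to coincide with high scores receive high heat values, which is the property the seed extraction relies on.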

We applied RISE to our classifier to generate heat maps \(H_{rise} \in \mathbb{R}^{N, H, W}\) for each of the images. The heat maps indicate the approximate likelihood for tumors to be present at each pixel. These heat maps were converted to localization seeds by setting the pixels corresponding to the top 20% of values in \(H_{rise}\) as positive seeds, and setting the pixels corresponding to the bottom 20% of values as negative seeds. \(S_+ = \{s_{+_1}, s_{+_2}, \ldots, s_{+_{N}}\} \in \mathbb{R}^{N, H, W}\) is defined as a binary map indicating positive seeds and \(S_- = \{s_{-_1}, s_{-_2}, \ldots, s_{-_{N}}\} \in \mathbb{R}^{N, H, W}\) is defined as a binary map indicating negative seeds. Any pixel not set as either a positive or negative seed was considered uncertain. Once all the seeds were generated, any images considered healthy by the classifier had their seeds replaced by new seeds. These new seeds did not include any positive seeds and instead set all pixels as negative seeds, which minimized the risk of inaccurate positive seeds from healthy images causing propagation errors.
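A minimal sketch of this seed extraction is given below. It assumes the 20% thresholds are computed per image, which the text does not state explicitly, and the function and argument names are illustrative.

```python
import numpy as np


def heatmap_to_seeds(heatmap, fraction=0.2, predicted_healthy=False):
    """Convert one (H, W) heat map into boolean positive/negative seed maps.

    Pixels in neither map are the unseeded (uncertain) region.
    """
    if predicted_healthy:
        # Healthy images: no positive seeds, every pixel is a negative seed.
        return (np.zeros_like(heatmap, dtype=bool),
                np.ones_like(heatmap, dtype=bool))
    high = np.quantile(heatmap, 1.0 - fraction)
    low = np.quantile(heatmap, fraction)
    positive = heatmap >= high
    # Guard against overlap when many pixels share the same value.
    negative = (heatmap <= low) & ~positive
    return positive, negative
```

On a 10 × 10 heat map with 100 distinct values, this marks the 20 largest values as positive seeds and the 20 smallest as negative seeds, leaving 60 pixels unseeded.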

Stage 3: Training the proposed superpixel generation and clustering models for weakly supervised segmentation

The superpixel generation model and the superpixel clustering model were trained to output the final segmentations without using the ground truth segmentations. The superpixel generation model assigns \(N_S\) soft association scores to each pixel, where \(N_S\) is the maximum number of superpixels to generate, which we set to 64. The association maps are represented by \(Q = \{q_1, \ldots, q_{N}\} \in \mathbb{R}^{N, N_S, H, W}\), where N is the number of images in X, and \(q_{k, s, p_y, p_x}\) is the probability that the pixel at \((p_y, p_x)\) is assigned to the superpixel s. Soft associations may result in a pixel having similar associations to multiple superpixels. The superpixel clustering model then assigns a score to each superpixel indicating the likelihood that the superpixel represents a cancerous region. The superpixel scores are represented by \(R = \{r_1, \ldots, r_{N}\} \in \mathbb{R}^{N, N_S}\), where \(r_{k, s}\) represents the probability that superpixel s contains a tumor. The pixels can then be soft clustered into a tumor segmentation by performing a weighted sum along the superpixel association scores using the superpixel scores as weights. The result of the weighted sum is the likelihood that each pixel belongs to a tumor segmentation based on its association with strongly weighted superpixels.
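The weighted sum can be written as a single tensor contraction. The sketch below uses random stand-ins for the two network outputs; the helper names are hypothetical, and only the array shapes follow the definitions of Q and R above.

```python
import numpy as np


def softmax(x, axis):
    """Numerically stable softmax along one axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)


def tumor_heatmap(q, r):
    """Soft-cluster pixels into a tumor likelihood map.

    q: (N, N_S, H, W) pixel-to-superpixel associations (sum to 1 over N_S)
    r: (N, N_S) superpixel scores in [0, 1]
    returns: (N, H, W) per-pixel tumor likelihood
    """
    return np.einsum("nshw,ns->nhw", q, r)
```

Because the associations of each pixel sum to 1, the result is a convex combination of the superpixel scores and therefore stays in the range 0 to 1 whenever the scores do.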

The superpixel generator takes input \(x_k\) and outputs a corresponding value \(q_k\) by passing the direct output of the superpixel generation model through a SoftMax function to rescale the outputs from 0 to 1 along the \(N_S\) superpixel associations. The clustering model receives a concatenation of \(x_k\) and \(q_k\) as input, and the outputs of the clustering model are passed through a SoftMax function to yield superpixel scores R. Heat maps \(H_{spixel_+} \in \mathbb{R}^{N, H, W}\) that localize the tumors can be acquired from Q and R by multiplying each of the \(N_S\) association maps in Q by their corresponding scores R, and then summing along the \(N_S\) channels as shown in (1).

The superpixel generator architecture is based on AINet proposed by Wang et al. [40], which is an FCN-based superpixel segmentation model that uses a variational autoencoder. The innovation introduced by AINet is the association implantation module, which improves superpixel segmentation performance by allowing the model to directly perceive the associations between pixels and their surrounding candidate superpixels. We altered AINet, which outputs local superpixel associations, to output global associations instead so that Q could be passed into the superpixel clustering model. This allowed the generator model to be trained in tandem with the clustering model.

Two different loss functions were used to train the superpixel generation and clustering models. The first loss function, \(L_{spixel}\), was proposed by Yang et al. [25] and minimizes the variation in pixel intensities and pixel positions in each superpixel. This loss is defined in (2), where p represents a pixel's coordinates ranging from (1, 1) to (H, W), and m is a coefficient used to tune the size of the superpixels, which we set as \(\frac{3}{160}\). We selected this value for m by multiplying the value suggested by the original work, \(\frac{3}{16000}\) [25], by 100 to achieve the desired superpixel size. \(l_s\) and \(u_s\) are the vectors representing the mean superpixel location and the mean superpixel intensity for superpixel s, respectively.

The second loss function, \(L_{seed}\), is a loss from the Seed, Expand, and Constrain paradigm for weakly supervised segmentation. This loss was designed to train models to output segmentations that include positive seeded regions and exclude negative seeded regions [41]. This loss is defined in (3) and (4), where C indicates whether the positive or negative seeds of an image \(s_k\) are being evaluated. These losses, when combined, encourage the models to account for both the localization seeds S and the pixel intensities. This results in \(H_{spixel_+}\) localizing the unseeded regions that correspond to the pixel intensities in the positive seeds. The combined loss is presented in (5), where \(\alpha\) is a weight for the seed loss. The output \(H_{spixel_+}\) can then be thresholded to generate final segmentations \(E_{spixel_+} \in \mathbb{R}^{N, H, W}\).

While the superpixel generation and clustering models were trained using all images in X, during inference the images predicted to be healthy by the classifier were assigned empty output segmentations.

$$\begin{aligned} H_{{spixel_+}_k} = \sum_{s \in N_S} Q_{k,s} R_{k,s} \end{aligned}$$

(1)

$$\begin{aligned} L_{spixel} = \frac{1}{N} \sum_{k=1}^{N} \sum_p \left( \left\| \sum_{s \in N_S} u_s Q_{k,s}(p) \right\|_2 + m \left\| \sum_{s \in N_S} l_s Q_{k,s}(p) \right\|_2 \right) \end{aligned}$$

(2)

$$\begin{aligned} H_{{spixel_-}_k} = 1 - H_{{spixel_+}_k} \end{aligned}$$

(3)

$$\begin{aligned} L_{seed} = \frac{1}{N} \sum_{k=1}^{N} \frac{-\sum_{C \in [+, -]} \sum_{i, j \in s_{C_k}} \log {H_{{spixel_C}_k}}_{i,j}}{\sum_{C \in [+, -]} \left| s_{C_k} \right|} \end{aligned}$$

(4)

$$\begin{aligned} L = L_{spixel} + \alpha L_{seed} \end{aligned}$$

(5)
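To make the seed loss in (3) and (4) and the combined loss in (5) concrete, the following NumPy sketch evaluates them on arrays. It is an illustration under assumptions rather than the training code: the small `eps` inside the logarithm and the guard against images with no seeds are our additions, and the superpixel loss is passed in as an already computed number.

```python
import numpy as np


def seed_loss(h_pos, s_pos, s_neg, eps=1e-8):
    """Seed loss averaged over images.

    h_pos: (N, H, W) tumor likelihood maps
    s_pos, s_neg: (N, H, W) boolean positive / negative seed maps
    """
    h_neg = 1.0 - h_pos                                # Eq. (3)
    log_pos = np.log(h_pos + eps) * s_pos              # log-likelihood on positive seeds
    log_neg = np.log(h_neg + eps) * s_neg              # log-likelihood on negative seeds
    numer = -(log_pos + log_neg).sum(axis=(1, 2))
    n_seeds = s_pos.sum(axis=(1, 2)) + s_neg.sum(axis=(1, 2))
    return float(np.mean(numer / n_seeds.clip(min=1)))  # Eq. (4)


def total_loss(l_spixel, l_seed, alpha=50.0):
    """Combined loss of Eq. (5); alpha = 50 is the value reported in the text."""
    return l_spixel + alpha * l_seed
```

A heat map equal to 0.5 everywhere gives a seed loss of about log 2, while a heat map that is 1 on the positive seeds and 0 on the negative seeds gives a loss close to 0; unseeded pixels do not contribute.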

Implementation details

For the classifier model, we used a VGG-16 architecture [42] with batch normalization, whose output was passed through a Sigmoid function. The classifier was trained to optimize the binary cross-entropy between the output probabilities and the binary ground truths using an Adam optimizer with \(\beta_1 = 0.9, \beta_2 = 0.999, \epsilon = 1e-8\), and a weight decay of 0.1 [43]. The classifier was trained for 100 epochs using a batch size of 32. The learning rate was initially set to \(5e-4\) and then decreased by a factor of 10 when the validation loss did not decrease by \(1e-4\).

When using RISE, we set the number of masks for an image to 4000 and used the same masks across all images.

For the clustering model, we used a ResNet-18 architecture [44] with batch normalization. The superpixel generation and clustering models were trained using an Adam optimizer with \(\beta_1 = 0.9, \beta_2 = 0.999, \epsilon = 1e-8\), and a weight decay of 0.1. The models were trained for 100 epochs using a batch size of 32. The learning rate was initially set to \(5e-4\), which was halved every 25 epochs. The weight for the seed loss, \(\alpha\), was set to 50.
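The step schedule described for the superpixel models can be written as a one-line function. This sketch assumes epochs are counted from 0 and that the halving takes effect at epochs 25, 50, and 75; the function name is illustrative.

```python
def step_halving_lr(epoch, base_lr=5e-4, period=25):
    """Learning rate at a given 0-indexed epoch when the rate is
    halved every `period` epochs."""
    return base_lr * 0.5 ** (epoch // period)
```

Under these assumptions the rate is 5e-4 for epochs 0 to 24, 2.5e-4 for epochs 25 to 49, 1.25e-4 for epochs 50 to 74, and 6.25e-5 for the remaining epochs of a 100-epoch run.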

Evaluation metrics

We evaluated the segmentations generated by our proposed weakly supervised segmentation method and comparative methods using the Dice coefficient (Dice) and the 95% Hausdorff distance (HD95). We also evaluated the seeds generated using RISE and the seeds generated for other comparative methods using Dice, HD95, and a metric that we refer to as the undersegmented Dice coefficient (U-Dice).

Dice is a common metric in image segmentation that measures the similarity between two binary segmentations. Dice compares the pixel-wise agreement between the generated and ground truth segmentations using a value from 0 to 1. A value of 0 indicates no overlap between the two segmentations, while 1 indicates perfect overlap. A smoothing factor of 1 was used to account for division by zero with empty segmentations and empty ground truths.

The Hausdorff distance is the maximum distance among all the distances from each point on the border of the generated segmentation to its closest point on the boundary of the ground truth segmentation. Therefore, the Hausdorff distance represents the maximum distance between two segmentations. However, the Hausdorff distance is extremely sensitive to outliers. To mitigate this limitation of the metric, we used HD95, which is the 95th percentile of the ordered distances. HD95 values of 0 indicate perfect segmentations, while higher HD95 values indicate segmentations with increasingly flawed boundaries. HD95 was set to 0 when either the segmentations/seeds or the ground truths had empty segmentations.
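A brute-force sketch of HD95 as described above is shown below. It follows the directed definition in the text (from the generated boundary to the ground truth boundary); many toolkits instead report a symmetric variant, so values may differ from library implementations. Boundary pixels are assumed to be foreground pixels with at least one 4-connected background neighbour, and distances are in pixels.

```python
import numpy as np


def boundary_points(mask):
    """Coordinates of foreground pixels that touch the background
    (4-connectivity); pixels outside the image count as background."""
    mask = mask.astype(bool)
    padded = np.pad(mask, 1, constant_values=False)
    interior = (padded[:-2, 1:-1] & padded[2:, 1:-1]
                & padded[1:-1, :-2] & padded[1:-1, 2:])
    return np.argwhere(mask & ~interior)


def hd95(pred, truth):
    """95th percentile of distances from each predicted boundary pixel
    to its nearest ground-truth boundary pixel; 0 if either mask is empty."""
    if not pred.any() or not truth.any():
        return 0.0
    a = boundary_points(pred)
    b = boundary_points(truth)
    dists = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return float(np.percentile(dists.min(axis=1), 95))
```

The pairwise distance matrix makes this quadratic in the number of boundary pixels, which is acceptable for 128 × 128 slices but not for large volumes.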

U-Dice is an alteration to Dice that measures how much of the seeds undersegment the ground truths. We used this measure because our method assumes that the seeds undersegment the ground truths rather than precisely contouring them. Therefore, this measure can be used to determine the impact of using undersegmented seeds versus more oversegmented seeds. A value of 1 indicates that the seeds perfectly undersegment the ground truths and a value of 0 indicates that the seed does not have any overlap with the ground truth. A smoothing factor of 1 was also used for U-Dice. The equation for Dice is presented in Eq. 6 and the equation for U-Dice is presented in Eq. 7, where A is the seed or proposed segmentation and B is the ground truth.

$$\begin{aligned} \text{Dice} = \frac{2 |A \cap B| + 1}{|A| + |B| + 1} \end{aligned}$$

(6)

$$\begin{aligned} \text{U-Dice} = \left\{ \begin{array}{ll} 0 & \text{if } |A| = 0, |B| > 0 \\ \frac{|A \cap B| + 1}{|A| + 1} & \text{otherwise} \end{array}\right. \end{aligned}$$

(7)
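The two overlap metrics can be sketched as follows. The exact placement of the smoothing factor is our reading of the text, not a confirmed detail: Dice is taken as (2|A ∩ B| + 1) / (|A| + |B| + 1), and U-Dice as (|A ∩ B| + 1) / (|A| + 1), with U-Dice forced to 0 when the seed is empty but the ground truth is not.

```python
import numpy as np


def dice(a, b, smooth=1.0):
    """Smoothed Dice between two binary masks (assumed smoothing placement)."""
    a, b = a.astype(bool), b.astype(bool)
    inter = np.logical_and(a, b).sum()
    return float((2.0 * inter + smooth) / (a.sum() + b.sum() + smooth))


def u_dice(a, b, smooth=1.0):
    """Fraction of the seed `a` that lies inside the ground truth `b`,
    with the same smoothing factor (assumed form)."""
    a, b = a.astype(bool), b.astype(bool)
    if not a.any() and b.any():
        return 0.0
    inter = np.logical_and(a, b).sum()
    return float((inter + smooth) / (a.sum() + smooth))
```

With these forms, a seed that lies entirely inside the ground truth scores a U-Dice of 1 even when its Dice is low, which matches the stated purpose of rewarding undersegmentation.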