Emerging research suggests that GPT-4 may have a role in enhancing accuracy and streamlining reviews of radiology reports.

For the retrospective study, recently published in Radiology, researchers compared the performance of GPT-4 (OpenAI) with that of six radiologists of varying experience in detecting errors (ranging from inappropriate wording and spelling errors to side confusion) in 200 radiology reports. The study authors noted that 150 errors were deliberately added to 100 of the reports being reviewed.

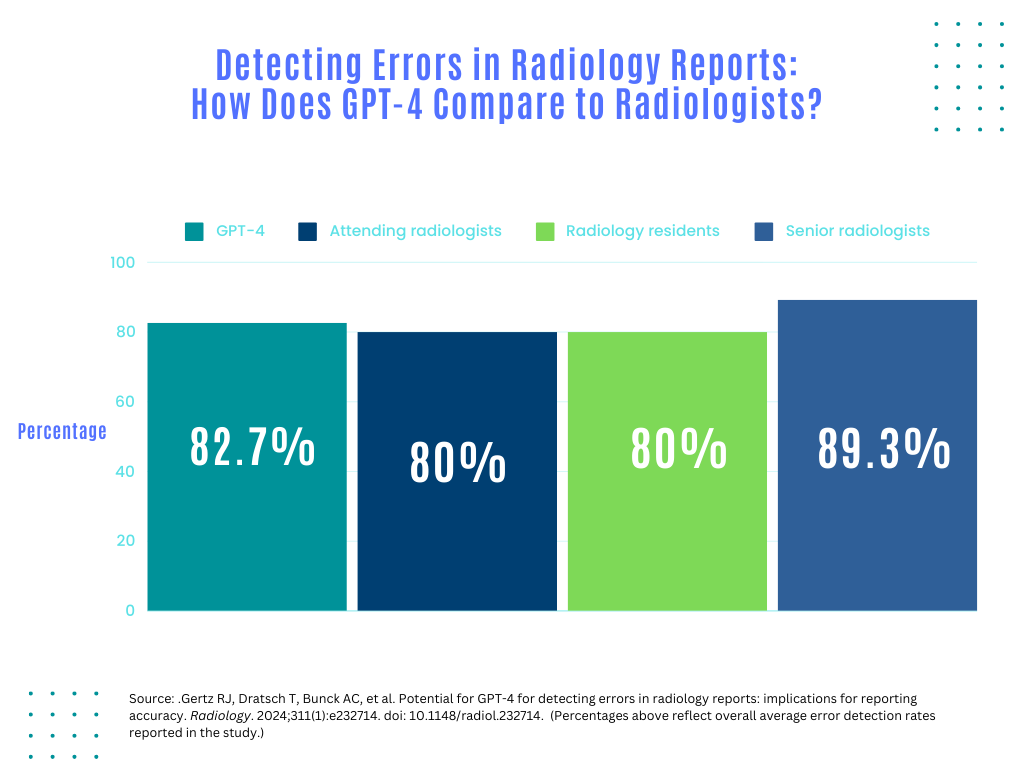

The study authors found that the overall average error detection rate for GPT-4 reviews of radiology reports (82.7 %) was comparable to those of attending radiologists (80 %) and radiology residents (80 %) but lower than the average for the two senior radiologists (89.3 %).

For radiography reports specifically, GPT-4 had an average error detection rate (85 %) comparable to that of senior radiologists (86 %) and significantly higher than those of attending radiologists (78 %) and radiology residents (77 %).

“Our results suggest that large language models (LLMs) may perform the task of radiology report proofreading with a level of proficiency comparable to that of most human readers. (As) errors in radiology reports are likely to occur across all experience levels, our findings may be reflective of typical clinical environments. This underscores the potential of artificial intelligence to improve radiology workflows beyond image interpretation,” wrote lead study author Roman J. Gertz, M.D., a resident in the Department of Radiology at University Hospital of Cologne in Cologne, Germany, and colleagues.

The researchers noted that GPT-4 reviewed radiology reports significantly faster than the radiologists did. The mean reading time per report was 51.1 seconds for senior radiologists, 43.2 seconds for attending radiologists and 66.6 seconds for radiology residents. For GPT-4, the study authors found a mean reading time of 3.5 seconds. They also noted a total reading time of 19 minutes for GPT-4 to review all 200 radiology reports, compared with 1.4 hours for the fastest radiologist.

“GPT-4 demonstrated a shorter processing time than any human reader, and the mean reading time per report for GPT-4 was faster than the fastest radiologist in the study …,” noted Gertz and colleagues.

Three Key Takeaways

- Efficiency boost. GPT-4 demonstrates significantly faster reading times than human radiologists, highlighting its potential to streamline radiology report reviews and improve workflow efficiency. Its mean reading time per report was notably shorter than even that of the fastest radiologist in the study, suggesting substantial time savings.

- Comparable error detection. GPT-4 detects overall errors in radiology reports at a rate comparable to that of attending radiologists and radiology residents. This suggests that large language models (LLMs) like GPT-4 may proofread radiology reports with a level of proficiency comparable to that of experienced human readers, potentially reducing the burden on radiologists.

- Varied error detection proficiency. While GPT-4 performs well in detecting errors of omission, it is less proficient than senior radiologists and radiology residents at identifying instances of side confusion. Understanding these nuances in error detection may help optimize the integration of AI tools like GPT-4 into radiology workflows.

For errors of omission, GPT-4's detection rate was comparable to that of senior radiologists and superior to those of radiology residents and attending radiologists. However, the researchers noted that GPT-4 had a significantly lower average detection rate for side confusion errors (78 %) than senior radiologists (91 %) and radiology residents (89 %).

For radiology reports involving computed tomography (CT) or magnetic resonance imaging (MRI), the study authors found that GPT-4 had an average detection rate (81 %) comparable to those of attending radiologists (81 %) and radiology residents (82 %), but 11 percentage points lower than that of senior radiologists (92 %).

In an accompanying editorial, Howard P. Forman, M.D., M.B.A., noted that exploring the utility of assistive technologies is essential for devoting more focus to practicing at the “top of our license” in an environment of declining reimbursement and escalating imaging volume. However, Dr. Forman cautioned against over-reliance on LLMs.

“After review by the imperfect LLM, will the radiologist spend the same time proofreading? Or will that radiologist be comforted in knowing that most errors had already been found and do a more cursory review? Could this inadvertently lead to worse reporting?” asked Dr. Forman, a professor of radiology, economics, public health, and management at Yale University.

(Editor’s note: For related content, see “Can ChatGPT and Bard Bolster Decision-Making for Cancer Screening in Radiology?,” “Can ChatGPT be an Effective Patient Communication Tool in Radiology?” and “Can ChatGPT Pass a Radiology Board Exam?”)

Regarding study limitations, the study authors conceded that the deliberately introduced errors do not reflect the full variety of errors that may be found in radiology reports. They also suggested that the experimental nature of the study may have inflated error detection rates through a Hawthorne effect, in which participants altered their reviews of radiology reports because they knew they were being observed. The researchers acknowledged that their assessment of possible cost savings from using GPT-4 to review radiology reports does not account for the costs of integrating a large language model into existing radiology workflows.